Revolutionized is reader-supported. When you buy through links on our site, we may earn an affiliate commission. Learn more here.

Many would argue that artificial intelligence, much like robotics, lacks emotional capacity. However, voice recognition has advanced significantly in recent years, evolving from simply processing commands to detecting, interpreting and even responding to subtle emotional cues in human speech.

While affective computing might sound like something out of Black Mirror, emotion detection is advantageous in improving voice-based interactions. Voice assistants have transformed into emotionally responsive agents, largely due to the integration of emotional AI. This technology bridges the gap between purely objective automation and intuitive, human-centric computing.

The human element in AI is rooted in the technological and scientific principles that guide its development and application.

Advanced algorithms scour diverse inputs to detect emotions, including facial expressions, vocal tone, written text and physiological metrics like heart rate. Micro-expressions, for example, are used to identify emotions such as happiness or anger. Today’s well-trained AI models can detect up to seven facial emotions with an accuracy rate of 96%. Voice analysis, on the other hand, focuses on pitch, tone, speed and volume to determine emotional states.
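To make the voice-analysis step more concrete, here is a minimal sketch of how two of those cues, volume and pitch, might be measured from raw audio samples. It is an illustration only: the function names `rms_volume` and `estimate_pitch` are hypothetical, the input is a synthetic tone rather than real speech, and production systems use far more robust methods.

```python
import math

def rms_volume(samples):
    """Root-mean-square amplitude, a common proxy for loudness."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def estimate_pitch(samples, sample_rate, min_hz=80, max_hz=400):
    """Estimate pitch by finding the lag with the strongest autocorrelation.

    The 80-400 Hz search range is an assumed range for speaking voices.
    """
    min_lag = sample_rate // max_hz
    max_lag = sample_rate // min_hz
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(samples[i] * samples[i + lag]
                    for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag

# Synthetic stand-in for a voice: a 200 Hz tone with amplitude 0.5.
sample_rate = 8000
voice = [0.5 * math.sin(2 * math.pi * 200 * i / sample_rate)
         for i in range(800)]

volume = rms_volume(voice)
pitch = estimate_pitch(voice, sample_rate)
print(f"volume={volume:.3f}, pitch={pitch:.1f} Hz")
```

An emotion model would take features like these as inputs. A raised pitch combined with higher volume, for example, might be weighed as a possible sign of agitation, though no single feature is decisive on its own.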

Both spoken and written language offer a wealth of data for natural language processing (NLP), which evaluates emotional tone and sentiment by examining word choice, punctuation and speech patterns. Sentiment analysis, also known as opinion mining, processes large volumes of text to classify content as positive, negative or neutral. This classification informs how AI systems generate emotionally appropriate responses.
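As a rough illustration of that three-way classification, the sketch below scores text against a tiny word list and treats an exclamation mark as an intensifier. The word lists, the doubling rule and the function names are all assumptions made for this example. Real sentiment models are trained on large datasets rather than hand-written lexicons.

```python
import re

# Toy lexicons, invented for illustration only.
POSITIVE = {"love", "great", "happy", "excellent", "thanks"}
NEGATIVE = {"hate", "terrible", "angry", "awful", "broken"}

def sentiment_score(text):
    """Count positive words minus negative words; '!' doubles the score."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum((w in POSITIVE) - (w in NEGATIVE) for w in words)
    if "!" in text:
        score *= 2
    return score

def classify(text):
    """Map the numeric score onto positive, negative or neutral."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

for sentence in ("I love this, it is great!",
                 "This is terrible and I hate it",
                 "The package arrived on Tuesday"):
    print(classify(sentence), "<-", sentence)
```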

As AI systems are continually used and trained on large datasets, they learn to identify patterns in emotional signals with greater sensitivity and accuracy. A newer approach based on reinforcement learning treats emotions as dynamic patterns shaped by recent rewards, future expectations and perceived environmental changes. This mirrors how living beings interpret the world.

In one case study, an AI system independently learned and identified eight basic emotional patterns, each aligned with a natural human emotion and appropriate to specific situations. These AI agents were not only capable of self-directed learning but could also generate synthetic emotions that closely mimic human affect. Instead of relying solely on hard-coded or case-specific rules, machine learning enables AI to develop an internal emotional spectrum shaped by experience and interaction.

Another study using Mel-Frequency Cepstral Coefficients (MFCCs) found that training over 1,000 epochs led to high accuracy in detecting emotions from voice. The system extracted subtle, indirect emotional cues from speech by converting sound waves into compact representations of their short-term spectral energy. This suggests that the more varied emotional expressions an AI is exposed to, the more effectively it learns to recognize them over time.
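For readers curious about what MFCC extraction involves, the following is a simplified, dependency-free sketch of the textbook pipeline for a single audio frame: window the frame, take its power spectrum, pool the energy through mel-spaced triangular filters, take logs and apply a discrete cosine transform. It is not the pipeline used in the study, whose details are not given here. The filter count, coefficient count and test tones are arbitrary choices for the example.

```python
import math

def hz_to_mel(hz):
    return 2595.0 * math.log10(1.0 + hz / 700.0)

def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

def power_spectrum(frame):
    """Naive DFT power spectrum for bins 0..N/2 (slow but dependency-free)."""
    n = len(frame)
    spectrum = []
    for k in range(n // 2 + 1):
        real = sum(x * math.cos(2 * math.pi * k * i / n)
                   for i, x in enumerate(frame))
        imag = sum(x * math.sin(2 * math.pi * k * i / n)
                   for i, x in enumerate(frame))
        spectrum.append((real * real + imag * imag) / n)
    return spectrum

def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters spaced evenly on the mel scale up to Nyquist."""
    top = hz_to_mel(sample_rate / 2)
    mels = [top * i / (n_filters + 1) for i in range(n_filters + 2)]
    bins = [int((n_fft + 1) * mel_to_hz(m) / sample_rate) for m in mels]
    bank = []
    for j in range(1, n_filters + 1):
        left, center, right = bins[j - 1], bins[j], bins[j + 1]
        weights = [0.0] * (n_fft // 2 + 1)
        for k in range(left, center):
            weights[k] = (k - left) / (center - left)
        for k in range(center, right):
            weights[k] = (right - k) / (right - center)
        bank.append(weights)
    return bank

def mfcc(frame, sample_rate, n_filters=10, n_coeffs=5):
    """Compute a few MFCCs for one frame of audio samples."""
    n = len(frame)
    # Hamming window reduces spectral leakage at the frame edges.
    windowed = [x * (0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)))
                for i, x in enumerate(frame)]
    spectrum = power_spectrum(windowed)
    # Small constant avoids log(0) for empty filters.
    log_energies = [
        math.log(sum(w * p for w, p in zip(weights, spectrum)) + 1e-10)
        for weights in mel_filterbank(n_filters, n, sample_rate)
    ]
    # DCT-II decorrelates the log filterbank energies.
    return [
        sum(e * math.cos(math.pi * c * (m + 0.5) / n_filters)
            for m, e in enumerate(log_energies))
        for c in range(n_coeffs)
    ]

def tone(freq, sample_rate=8000, n=128):
    """Synthetic sine tone standing in for a frame of speech."""
    return [math.sin(2 * math.pi * freq * i / sample_rate) for i in range(n)]

low = mfcc(tone(300), 8000)
high = mfcc(tone(2000), 8000)
print([round(c, 2) for c in low])
print([round(c, 2) for c in high])
```

The two tones produce different coefficient vectors, which is the property a classifier relies on: frames of speech with different spectral shapes map to different points in MFCC space. In practice, an established audio library would be used instead of a hand-rolled DFT.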

Emotion-aware systems are making waves across industries by enabling computers to respond to the people they serve in more emotionally sensitive ways.

Remote consultations expanded rapidly with the onset of the COVID-19 pandemic and have remained a popular option in healthcare due to their convenience and high patient satisfaction, especially because they eliminate the need for in-person visits.

Today, teleconsultation extends to mental health assessments and has advanced significantly with the integration of AI and machine learning. Emotional analysis is now being used to improve diagnostic accuracy. Cameras can capture a patient’s facial expressions and AI systems analyze these cues to detect emotions in real time, providing enhanced insights for clinicians.

A recent study found that patients considered emotional recognition valuable in virtual mental health care. However, they were less comfortable with the idea of having their emotions recorded, whereas consultants were more receptive to tools that enhance patient care.

Robots are advancing beyond telehealth chatbots to become smart companions for older adults.

ElliQ, an AI-powered robot, was deployed by 15 U.S. government agencies to support seniors facing loneliness. It received positive feedback for being engaging and enhancing well-being. ElliQ uses voice, sound and light to hold conversations, play music, make video calls and provide cognitive games, stress relief and health reminders. Its empathetic responses are key to improving care quality and easing social isolation among older people.

One of the most advanced uses of AI is in customer service. By 2025, 85% of the industry is expected to adopt generative AI. Critics argue that chatbots will never be able to distinguish between human frustration and satisfaction because they lack the emotional insight to grasp the underlying meaning. However, this gap is closing faster than expected.

Emotionally aware voice bots can now detect irritation or confusion and either adjust their tone or preemptively escalate the issue to a human agent. Ongoing analysis of emotional signals also enables real-time coaching, improving empathy in service with each interaction.
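The adjust-or-escalate decision can be pictured as a simple policy over recent emotion scores. The sketch below assumes an upstream model has already produced a frustration score between 0 and 1 for each customer turn. The function name `next_action`, the thresholds and the three-turn window are hypothetical values chosen for illustration, not figures from any deployed system.

```python
def next_action(frustration_scores, soften_at=0.4, escalate_at=0.7, window=3):
    """Pick the bot's next move from the average of the most recent scores."""
    recent = frustration_scores[-window:]
    if not recent:
        return "continue"
    average = sum(recent) / len(recent)
    if average >= escalate_at:
        return "escalate_to_human"
    if average >= soften_at:
        return "soften_tone"
    return "continue"

print(next_action([0.1, 0.2]))       # calm conversation
print(next_action([0.3, 0.5, 0.6]))  # rising irritation
print(next_action([0.6, 0.8, 0.9]))  # sustained frustration
```

Averaging over a short window rather than reacting to a single turn is one way to avoid escalating on a momentary spike, at the cost of responding a little later.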

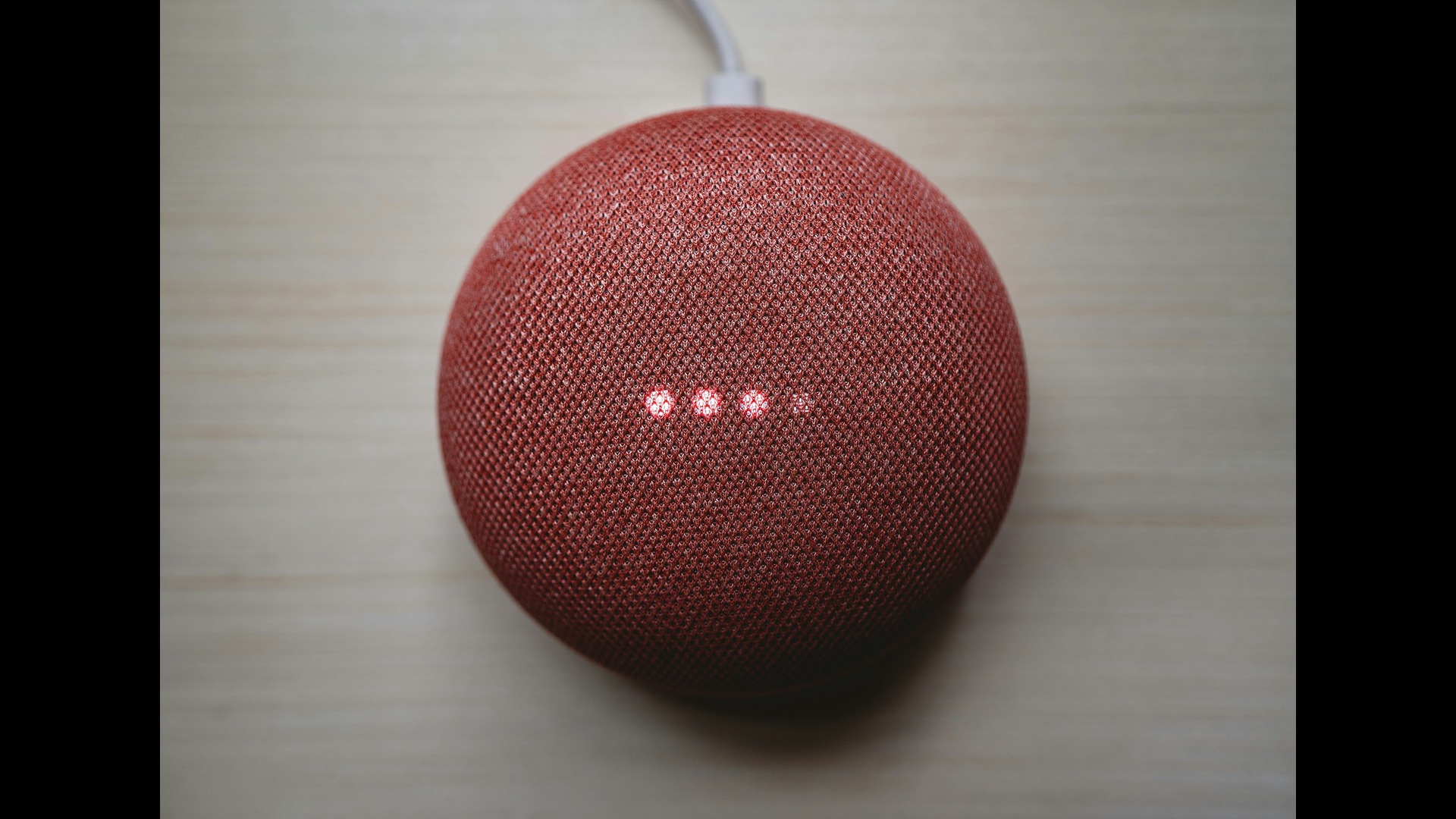

Voice assistants in smart home devices often use flat, monotone speech due to reliance on text-to-speech technology. While some users appreciate the consistency, efforts are now being made to deliver more personalized and emotionally responsive interactions for a more engaging experience.

Responses can adapt to the user’s emotional state — for instance, if stress is detected in their voice, the assistant might suggest calming music or relaxation routines. This benefits the 157.1 million voice assistant users in the U.S., enhancing user engagement and overall well-being through integrated wellness features.

Amazon Alexa has supported emotional expression since 2019, when it introduced an emotion tag that enables the voice assistant to express feelings during interactions. This feature allows Alexa to respond more naturally and intuitively. It is currently available in four languages: U.K. English, U.S. English, German and Japanese. Alexa can convey emotions such as excitement or disappointment based on the context of the conversation.
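For developers, an emotion tag of this kind is typically applied through Speech Synthesis Markup Language (SSML). The sketch below builds such markup in Python. The helper name `emotional_ssml` is hypothetical, and the tag name, emotion names and intensity levels reflect the Alexa Skills Kit SSML reference as best understood at the time of writing, so they should be checked against the current documentation before use.

```python
from xml.sax.saxutils import escape

# Values assumed from the Alexa SSML reference; verify before relying on them.
ALLOWED_EMOTIONS = {"excited", "disappointed"}
ALLOWED_INTENSITIES = {"low", "medium", "high"}

def emotional_ssml(text, emotion, intensity="medium"):
    """Wrap text in an emotion tag, rejecting unsupported values."""
    if emotion not in ALLOWED_EMOTIONS:
        raise ValueError(f"unsupported emotion: {emotion}")
    if intensity not in ALLOWED_INTENSITIES:
        raise ValueError(f"unsupported intensity: {intensity}")
    return (
        f'<speak><amazon:emotion name="{emotion}" intensity="{intensity}">'
        f"{escape(text)}"
        "</amazon:emotion></speak>"
    )

print(emotional_ssml("Your team won the match!", "excited", "high"))
print(emotional_ssml("Sorry, that order was cancelled.", "disappointed"))
```

Escaping the text keeps characters such as `&` from breaking the markup, and validating the emotion name up front surfaces mistakes before the request reaches the voice service.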

Emotional AI is also making its way into educational technologies by helping detect signs of frustration or boredom in learners. While it may sound like something from a sci-fi movie, voice-enabled tutors can adjust pacing and delivery or offer encouragement based on a student’s emotional state. For users with disabilities, emotionally responsive voice interfaces create a more natural and supportive communication channel, improving accessibility.

In Japan, toymakers are exploring toys that adapt to play or offer comfort if sadness is detected in a child’s voice. However, privacy concerns remain due to the potentially intrusive nature of monitoring children’s emotions with AI.

Safety-critical systems in vehicles are also benefiting from advances in emotional AI. By analyzing a driver’s voice for signs of stress or fatigue, these systems can issue alerts or adjust settings to help prevent accidents.

Toyota Motor Corporation was among the first to introduce a concept car featuring emotion-aware AI. This system monitors a driver’s emotional and physical condition using voice, facial expressions and physiological cues. If the driver appears stressed or sad, the car may suggest a more scenic route. It can also detect drowsiness through eye and head movements or blinking patterns — responding with loud sounds, blasts of cold air or prompting the driver to pull over.

Paired with emotion-responsive voice assistants, solo drivers may feel like they have a companion reminding them to rest and stay safe. Research shows that emotionally aligned AI assistants can improve driver focus and reduce accident risk, particularly in smart vehicles.

AI holds great potential, but integrating emotional recognition into voice systems introduces significant challenges and risks. Emotions vary across languages, cultures and contexts, making it difficult to ensure accuracy and avoid bias. Studies have shown that some systems incorrectly identify Black faces as angrier than their white counterparts, highlighting racial bias stemming from unbalanced training data.

There are also concerns about emotional manipulation, where detected feelings could be exploited for targeted ads or persuasive tactics. Since emotional data is considered sensitive biometric information, it raises cybersecurity and privacy issues. Systems must comply with regulations like the GDPR, the California Consumer Privacy Act and broader ethical standards.

Moreover, deep learning models often operate as “black boxes,” making their decision-making difficult to interpret. This lack of transparency can erode trust. Ongoing efforts toward explainable AI are essential to improve clarity and accountability.

Harnessing emotional intelligence marks a major step toward making artificial intelligence more relatable and intuitive, but it brings significant challenges. As more voice-controlled technologies evolve from static command-based tools into emotionally aware conversational partners, users are beginning to value the benefits of more human-like interactions with machines.

These systems are no longer just reactive; they’re beginning to anticipate and adapt. However, this progress must be approached cautiously to ensure AI serves human well-being rather than serving corporate interests or reinforcing harmful biases. As AI engages more deeply with human emotions, safeguarding privacy and security is more critical than ever.
