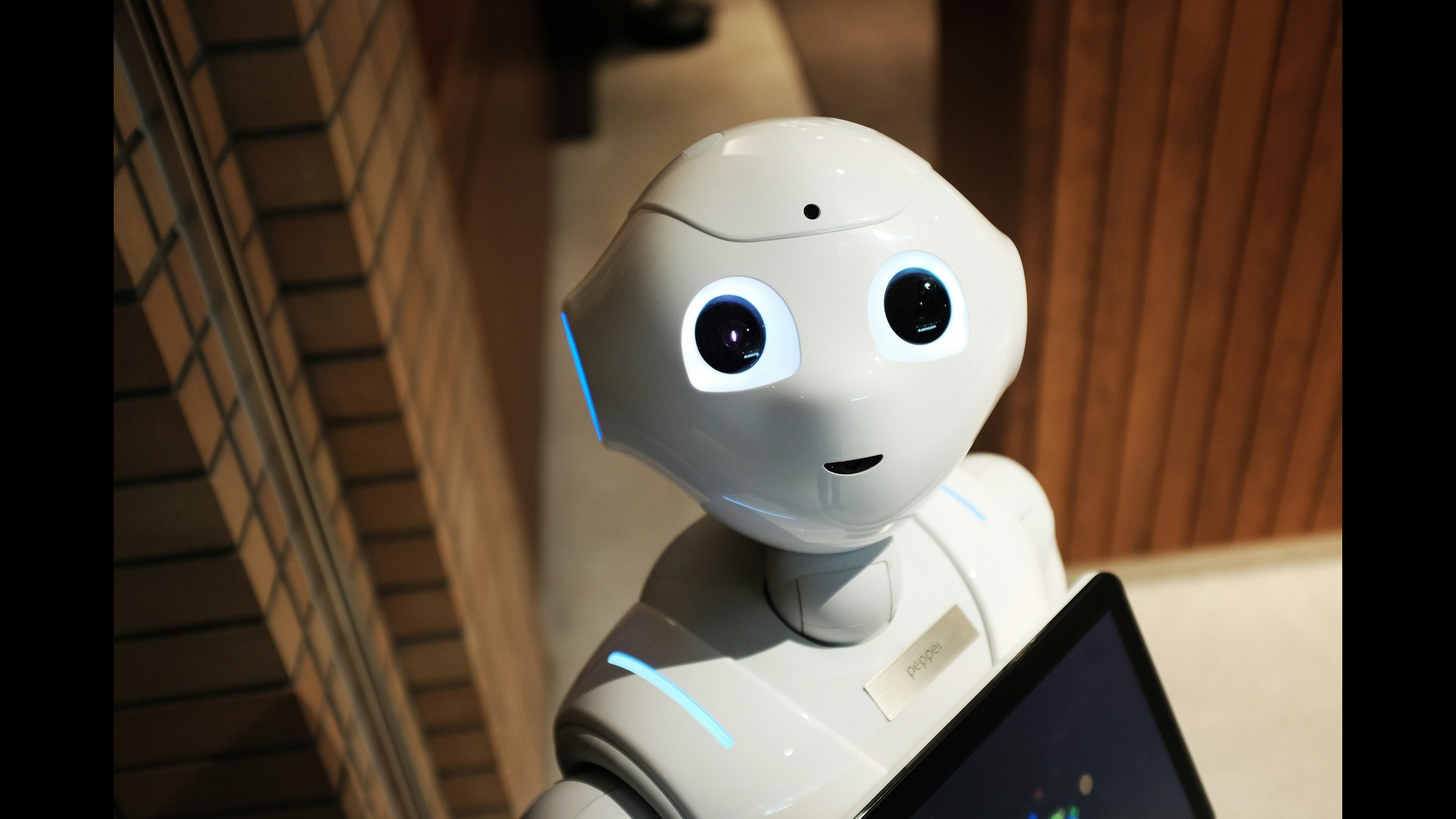

The Role of Digital Ethics in Next-Gen Robot Development

October 2, 2025 - Lou Farrell

Revolutionized is reader-supported. When you buy through links on our site, we may earn an affiliate commission. Learn more here.

Robots now work alongside clinicians, technicians and drivers, and the hard problem is judgment, not capability. Digital ethics sets rules for data use, autonomy limits and human override, then ties those rules to specs, tests and logs. Clear guidance and field-tested controls help teams reduce harm, comply with the law and earn trust without slowing delivery.

What Digital Ethics Means in Robotics

Digital ethics sets non-negotiables for how robotic systems handle data, decide actions and affect people. It covers safety, privacy, fairness, transparency and accountability, then binds those principles to requirements, interfaces and audits that engineers can implement.

In practice, teams translate values into sensing, memory and control constraints. They make tradeoffs explicit, document risk and verify behavior with scenario tests. This work treats people as stakeholders — not inputs — and keeps humans responsible for the outcomes the system enables.

Digital Ethics Belongs in the Pipeline

High-performing teams write ethics into architecture and continuous integration. They define harms, add countermeasures, test for failure, then instrument deployed systems to learn and patch. That continuous loop suits human-robot interaction, which steers people's attention in real time.

A 2025 review describes how immediacy, simultaneity and ephemerality can quietly mobilize engagement mechanisms through “phenomenal algorithms,” which robotics teams must anticipate in design.

Regulation Forces Design Choices

The EU AI Act codifies a risk-based approach with obligations for many robotic systems, including risk management, data governance, logging, human oversight and post-market monitoring. Selling into the EU requires compliance artifacts that live next to code and tests.

In the United States, there is no single AI statute. Federal policy leans on NIST’s voluntary AI Risk Management Framework, and executive direction has shifted: Executive Order 14110 (2023) was rescinded in January 2025 and replaced by new executive direction, so obligations vary by sector and agency.

States are filling gaps. Colorado’s 2024 AI Act is the first broad state law targeting high-risk AI, and its duties for developers and deployers are likely to reach robotics vendors.

Safety and Moral Responsibility in Human-Robot Teams

Healthcare sets a clear bar. Scholars argue for nonmaleficence (do no harm) and for a partnership principle: clinicians who use robots must ensure the tool aligns with their ethical duties, and they cannot offload moral responsibility to a device. Designers should reflect that stance in overrides, audit trails and clear handover states.
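To make "overrides, audit trails and clear handover states" concrete, here is a minimal sketch of a handover state machine. The mode names, the transition table and the `HandoverController` class are hypothetical choices for illustration, not a standard. The idea is that a human can always take over or stop the robot, and every transition records who made it and why.

```python
import time
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    AUTONOMOUS = "autonomous"
    HANDOVER_PENDING = "handover_pending"  # robot has asked a human to take over
    MANUAL = "manual"                      # a named human is in control
    STOPPED = "stopped"                    # emergency stop engaged


# Hypothetical transition table. STOPPED is reachable from every other mode,
# and autonomy can only resume after a human has explicitly taken control.
ALLOWED = {
    Mode.AUTONOMOUS: {Mode.HANDOVER_PENDING, Mode.MANUAL, Mode.STOPPED},
    Mode.HANDOVER_PENDING: {Mode.MANUAL, Mode.STOPPED},
    Mode.MANUAL: {Mode.AUTONOMOUS, Mode.STOPPED},
    Mode.STOPPED: {Mode.MANUAL},
}


@dataclass
class HandoverController:
    mode: Mode = Mode.AUTONOMOUS
    audit: list = field(default_factory=list)

    def transition(self, target: Mode, actor: str, reason: str) -> None:
        if target not in ALLOWED[self.mode]:
            raise ValueError(f"{self.mode.value} -> {target.value} is not allowed")
        # Audit trail: who changed the mode, from what, to what, and why.
        self.audit.append({
            "time": time.time(),
            "actor": actor,
            "from": self.mode.value,
            "to": target.value,
            "reason": reason,
        })
        self.mode = target
```

Requiring a restart from `STOPPED` to go through `MANUAL` is a deliberate choice in this sketch: a named person has to take ownership before the robot acts on its own again.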

Why Digital Ethics Matters in Next-Gen Robots

Robots make fast micro-decisions in messy environments. Ethics moves those decisions from implicit defaults to explicit design. Development teams that treat ethics as a product requirement reduce incident volume, accelerate approvals and maintain user trust.

This focus also clarifies why digital ethics is important for sensitive domains like elder care, education and public space. Predictable behavior and visible guardrails help communities accept useful automation without accepting hidden costs.

Emotional AI and Grief Tech Need Strict Boundaries

Agents and social robots already operate in emotionally charged contexts. A CHI 2023 study found mourners using companion chatbots and griefbots that simulate the deceased to buffer anxiety or depression, or to maintain an imagined relationship.

Features like memory retention and persona simulation demand explicit, revocable consent, role-based access and simple off-switches for users and families.
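One way to make consent explicit and revocable is a default-deny registry. The following is a minimal sketch under assumptions: the `ConsentRegistry` class, the feature names such as `"memory_retention"` and the role labels are all hypothetical. A feature runs only when the user has opted in and the caller's role permits it, and a single call works as the off-switch.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRegistry:
    # feature name -> user ids who have explicitly opted in
    grants: dict = field(default_factory=dict)
    # role -> features that role is permitted to use
    role_permissions: dict = field(default_factory=dict)

    def grant(self, user: str, feature: str) -> None:
        self.grants.setdefault(feature, set()).add(user)

    def revoke(self, user: str, feature: str) -> None:
        # Takes effect immediately; a no-op if consent was never given.
        self.grants.get(feature, set()).discard(user)

    def revoke_all(self, user: str) -> None:
        # One-step off-switch: withdraw every consent this user has given.
        for users in self.grants.values():
            users.discard(user)

    def allowed(self, user: str, role: str, feature: str) -> bool:
        # Default deny: both explicit consent and a permitting role are required.
        has_consent = user in self.grants.get(feature, set())
        has_role = feature in self.role_permissions.get(role, set())
        return has_consent and has_role
```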

Isaac Asimov’s Three Laws of Robotics presume that robots make moral choices and act on them. Real systems do not; code and training data drive their behavior. Effective governance assigns duties to designers, deployers and operators, specifies required safeguards and documentation, and then verifies compliance with tests and audits.

Security Threats Also Target Robots

Attackers use AI to spin up convincing prompts, clone voices and impersonate operators at machine speed. They mimic social cues to trick staff into authorizing actions or leaking data, and they do it faster than human phishers can.

Developers should therefore treat every human-robot interface as an attack surface and assume spoofed operators, prompt injection and telemetry tampering. They should also require strong identity checks for any action that moves money, opens doors or dispenses medications, sign commands end-to-end and alert on anomalous motion or network traffic.
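Below is a minimal sketch of end-to-end command signing with a gate on sensitive actions. It uses HMAC-SHA256 from Python's standard library as a stand-in. Many real control planes would prefer asymmetric signatures (for example Ed25519) so that robots never hold the signing key. The action names, the `operator_verified` field and the 30-second freshness window are assumptions for illustration. The sketch also assumes that the issuing service sets `operator_verified` only after its own identity check. Because that flag sits inside the signed payload, a spoofed client cannot flip it.

```python
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical actions that require a verified operator, per the text above.
SENSITIVE_ACTIONS = {"move_funds", "open_door", "dispense_medication"}
MAX_AGE_S = 30.0  # assumed freshness window to limit replayed commands


def _canonical(command: dict) -> bytes:
    # Sorted keys and fixed separators so signer and verifier hash identical bytes.
    return json.dumps(command, sort_keys=True, separators=(",", ":")).encode()


def sign_command(key: bytes, command: dict) -> dict:
    tag = hmac.new(key, _canonical(command), hashlib.sha256).hexdigest()
    return {"command": command, "sig": tag}


def verify_command(key: bytes, envelope: dict, now: Optional[float] = None) -> dict:
    command = envelope["command"]
    expected = hmac.new(key, _canonical(command), hashlib.sha256).hexdigest()
    # Constant-time comparison so timing does not reveal partial matches.
    if not hmac.compare_digest(expected, envelope["sig"]):
        raise PermissionError("bad signature")
    now = time.time() if now is None else now
    if now - command["issued_at"] > MAX_AGE_S:
        raise PermissionError("stale command")
    if command["action"] in SENSITIVE_ACTIONS and not command.get("operator_verified", False):
        raise PermissionError("sensitive action needs a verified operator")
    return command
```

The freshness check limits replay only roughly. A production design would likely also track nonces or sequence numbers.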

Creative work also requires provenance and respect for human meaning. Generative AI systems imitate style — not lived experiences. This gap justifies provenance, consent and compensation in creative pipelines that mix robots, cameras and models. Track training data, watermark outputs and license content, and give contributors a straightforward way to opt out or get paid.
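Tracking training data and honoring opt-outs can start with something as plain as a filtered manifest. This sketch assumes a hypothetical `Asset` record and made-up license labels. It keeps only assets from contributors who have not opted out and whose license is cleared, and it hashes each file so the exact bytes used are on record. The contributor field is kept so that payments can be traced later. Watermarking outputs is a separate problem and is not covered here.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    path: str
    contributor: str
    license: str  # hypothetical label, e.g. "licensed", "cc-by-4.0", "unknown"
    content: bytes


def build_manifest(assets, opted_out, allowed_licenses):
    """Keep assets whose contributor has not opted out and whose license is cleared."""
    manifest = []
    for asset in assets:
        if asset.contributor in opted_out:
            continue  # honor opt-outs before anything reaches training
        if asset.license not in allowed_licenses:
            continue  # unknown or uncleared licenses are excluded by default
        manifest.append({
            "path": asset.path,
            "contributor": asset.contributor,  # kept so payouts can be traced
            "license": asset.license,
            "sha256": hashlib.sha256(asset.content).hexdigest(),  # pins exact bytes
        })
    return manifest
```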

Changes in Jobs and Skills — and How to Respond

Robot deployment keeps rising. The International Federation of Robotics reported 4.28 million industrial robots in service worldwide as of 2023, with installations above half a million for a third year — led by Asia. The World Economic Forum projects that by 2027, employers will eliminate 83 million jobs and create 69 million, leaving 14 million fewer roles worldwide. Treat that gap as a planning assumption and fund reskilling and redeployment accordingly.

Employers and platforms highlight upskilling in AI literacy, AI preparedness, data analytics and prompt engineering, so managers must budget time and money for training with each deployment.

Human-robot interaction also steers attention and emotion in real time. Research shows that immediacy, simultaneity and ephemerality can hook users through “phenomenal algorithms.” Teams should measure how the system captures attention, then set guardrails for timing, prompt cadence and reward signals. For example, cap notification frequency, pause noncritical prompts during high-risk tasks and throttle variable rewards when operators show signs of overload.
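Those three guardrails can share one small gate. The sketch below uses a hypothetical `PromptGate` class with a sliding-window cap. It blocks noncritical prompts, including reward-style nudges, while a high-risk task is underway or an overload signal is raised. Safety-critical prompts always pass. How `high_risk` and `overloaded` get set, for example from task state or workload sensing, is assumed rather than specified. The same is true of the cap values.

```python
from collections import deque


class PromptGate:
    """Hypothetical gate deciding whether a prompt may be shown to an operator."""

    def __init__(self, max_prompts: int, window_s: float):
        self.max_prompts = max_prompts  # assumed policy cap per sliding window
        self.window_s = window_s
        self.sent = deque()             # timestamps of prompts already shown
        self.high_risk = False          # set while a high-risk task is underway
        self.overloaded = False         # set by some overload signal (assumed)

    def allow(self, now: float, critical: bool) -> bool:
        # Forget prompts that have aged out of the window.
        while self.sent and now - self.sent[0] >= self.window_s:
            self.sent.popleft()
        if not critical:
            # Noncritical prompts and reward nudges pause during risk or overload.
            if self.high_risk or self.overloaded:
                return False
            if len(self.sent) >= self.max_prompts:
                return False
        # Critical prompts always pass but still count toward the window.
        self.sent.append(now)
        return True
```

Counting critical prompts toward the window is a design choice in this sketch. It means a burst of safety alerts also suppresses routine nudges for a while afterward.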

Why Digital Ethics Is Important for Public Trust

Communities accept robots when they see safety, transparency and recourse. Clear definitions, repeatable tests and visible controls signal respect. Teams should publish incident reports, name an accountable owner and give users a straightforward way to override or appeal decisions. Because attackers exploit trust gaps, tie these practices to security as well as ethics.

Broader risk reporting puts mis- and disinformation near the top of global concerns and shows how AI accelerates social engineering. Deployers should assume attackers will spoof operators or inject malicious prompts at human touchpoints, then engineer fail-safe modes that degrade gracefully under attack.

Clear Stance on Responsibility in Robotics

Treat responsibility as an engineering goal with owners, budgets and deadlines. Put digital ethics in the repo and CI, not the slide deck. Ship measurable changes each release — a kill switch that works in every mode, a signed control plane, auditable decision logs and rate-limited prompts during high-risk work. Publish incident reports fast and give users a clear override and appeal. Fund upskilling so operators and maintainers keep pace as autonomy grows. Teams that follow this path earn trust, face less regulatory friction and win adoption in the real world.
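"Auditable decision logs" can be as simple as a hash chain. In this minimal sketch, each entry commits to the previous one, so editing or deleting a past record breaks verification. The `DecisionLog` class and the record fields are hypothetical. A chain like this only detects tampering if the latest hash is also stored somewhere the attacker cannot reach, such as an external log server. Otherwise someone could rewrite the whole chain.

```python
import copy
import hashlib
import json


class DecisionLog:
    """Append-only log in which each entry commits to the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev: str, record: dict) -> str:
        body = json.dumps({"prev": prev, "record": record}, sort_keys=True)
        return hashlib.sha256(body.encode()).hexdigest()

    def append(self, record: dict) -> str:
        record = copy.deepcopy(record)  # later edits by the caller cannot alter the log
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        digest = self._digest(prev, record)
        self.entries.append({"prev": prev, "record": record, "hash": digest})
        return digest

    def verify(self) -> bool:
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or self._digest(prev, entry["record"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```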


Author

Lou Farrell

Lou Farrell, Senior Editor, is a science and technology writer at Revolutionized, specializing in technological advancements and the impacts on the environment from new developments in the industry. He loves almost nothing more than writing, and enthusiastically tackles each new challenge in this ever-changing world. If not writing, he enjoys unwinding with some casual gaming, or a good sci-fi or fantasy novel.